Activities of "yolier.galan@rockblast.cl"

- ABP Framework version: v6.0.1

- UI type: Angular

- DB provider: EF Core

- Tiered (MVC) or Identity Server Separated (Angular): yes

- Use microservice template: yes

- Exception message and stack trace:

[web-gateway_e8dbcfd8-3]: [15:23:09 INF] CORS policy execution successful.

[web-gateway_e8dbcfd8-3]: [15:23:09 INF] Request finished HTTP/1.1 OPTIONS https://localhost:44325/api/file-management/file-descriptor/upload?directoryId=3a080000-9d8c-c259-9807-98257c036810 - - - 204 - - 0.3812ms

[web-gateway_e8dbcfd8-3]: [15:23:09 INF] Request starting HTTP/1.1 POST https://localhost:44325/api/file-management/file-descriptor/upload?directoryId=3a080000-9d8c-c259-9807-98257c036810 multipart/form-data;+boundary=---------------------------239247365239037117843134239485 3045796267

[web-gateway_e8dbcfd8-3]: [15:23:09 INF] CORS policy execution successful.

[web-gateway_e8dbcfd8-3]: [15:23:09 DBG] requestId: 0HMMOCVPSLJTM:00000007, previousRequestId: no previous request id, message: ocelot pipeline started

[web-gateway_e8dbcfd8-3]: [15:23:09 DBG] requestId: 0HMMOCVPSLJTM:00000007, previousRequestId: no previous request id, message: Upstream url path is /api/file-management/file-descriptor/upload

[web-gateway_e8dbcfd8-3]: [15:23:09 DBG] requestId: 0HMMOCVPSLJTM:00000007, previousRequestId: no previous request id, message: downstream templates are /api/file-management/{everything}

[web-gateway_e8dbcfd8-3]: [15:23:17 WRN] requestId: 0HMMOCVPSLJTM:00000007, previousRequestId: no previous request id, message: Error Code: UnmappableRequestError Message: Error when parsing incoming request, exception: System.IO.IOException: Stream was too long.

[web-gateway_e8dbcfd8-3]: at System.IO.MemoryStream.Write(Byte[] buffer, Int32 offset, Int32 count)

[web-gateway_e8dbcfd8-3]: at System.IO.MemoryStream.WriteAsync(ReadOnlyMemory`1 buffer, CancellationToken cancellationToken)

[web-gateway_e8dbcfd8-3]: --- End of stack trace from previous location ---

[web-gateway_e8dbcfd8-3]: at System.IO.Pipelines.PipeReader.<CopyToAsync>g__Awaited|15_1(ValueTask writeTask)

[web-gateway_e8dbcfd8-3]: at System.IO.Pipelines.PipeReader.CopyToAsyncCore[TStream](TStream destination, Func`4 writeAsync, CancellationToken cancellationToken)

[web-gateway_e8dbcfd8-3]: at Ocelot.Request.Mapper.RequestMapper.ToByteArray(Stream stream)

[web-gateway_e8dbcfd8-3]: at Ocelot.Request.Mapper.RequestMapper.MapContent(HttpRequest request)

[web-gateway_e8dbcfd8-3]: at Ocelot.Request.Mapper.RequestMapper.Map(HttpRequest request, DownstreamRoute downstreamRoute) errors found in ResponderMiddleware. Setting error response for request path:/api/file-management/file-descriptor/upload, request method: POST

[web-gateway_e8dbcfd8-3]: [15:23:17 DBG] requestId: 0HMMOCVPSLJTM:00000007, previousRequestId: no previous request id, message: ocelot pipeline finished

[web-gateway_e8dbcfd8-3]: [15:23:17 INF] Request finished HTTP/1.1 POST https://localhost:44325/api/file-management/file-descriptor/upload?directoryId=3a080000-9d8c-c259-9807-98257c036810 multipart/form-data;+boundary=---------------------------239247365239037117843134239485 3045796267 - 404 0 - 8055.6041ms

[web-gateway_e8dbcfd8-3]: [15:23:18 INF] Connection id "0HMMOCVPSLJTM", Request id "0HMMOCVPSLJTM:00000007": the application completed without reading the entire request body.
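Reading the trace above, the exception is thrown by `MemoryStream.Write`, reached from `Ocelot.Request.Mapper.RequestMapper.ToByteArray`, so the gateway appears to copy the whole request body into memory before forwarding it. A `MemoryStream` cannot hold more than `int.MaxValue` bytes, and the failing POST in the log reports a length of 3045796267 bytes. The sketch below only illustrates that arithmetic; it does not call Ocelot, and the variable names are illustrative.

```csharp
using System;

// Content length reported by the gateway log for the failing POST.
long contentLength = 3045796267L;

// A MemoryStream is backed by a single byte[] and cannot exceed int.MaxValue bytes.
long memoryStreamLimit = int.MaxValue; // 2147483647

Console.WriteLine($"Body size: {contentLength} bytes");
Console.WriteLine($"Limit:     {memoryStreamLimit} bytes");
Console.WriteLine($"Fits in a MemoryStream: {contentLength <= memoryStreamLimit}"); // False
```

If this reading is right, raising `MaxRequestBodySize` in Kestrel would not be enough on its own for bodies above roughly 2 GB, because the limit hit here belongs to the in-memory buffer rather than to Kestrel.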

- Steps to reproduce the issue:

0.- Use the microservices template.

1.- Create a microservice named Main.

2.- Add the FileManagement module to the Main microservice.

3.- Configure Kestrel in the gateways/Web module (Program.cs file) to allow uploading files larger than 28 MB.

// Prevent the Ocelot error when uploading a file larger than 28 MB

builder.WebHost.UseKestrel(options => {

options.Limits.MaxRequestBodySize = long.MaxValue;

});

4.- Configure Kestrel in the services/Main module (Program.cs file) to allow uploading files larger than 28 MB.

// Prevent the Ocelot error when uploading a file larger than 28 MB

builder.WebHost.UseKestrel(options => {

options.Limits.MaxRequestBodySize = long.MaxValue;

});

5.- Attempt to upload a file larger than 2.0 GB.

Question:

If the above bug cannot be fixed in the short term, how can we avoid CORS errors when the Angular app calls the Main microservice directly?
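To make the question concrete, this is roughly the kind of configuration I have in mind on the Main microservice, written with plain ASP.NET Core calls. It is a minimal sketch, not a confirmed fix: the origin `http://localhost:4200` is an assumption for where the Angular app is served, and where this belongs inside an ABP module is not shown.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;

var builder = WebApplication.CreateBuilder(args);

// Assumption: the address the Angular app is served from.
const string angularOrigin = "http://localhost:4200";

builder.Services.AddCors(options =>
{
    options.AddDefaultPolicy(policy =>
    {
        policy.WithOrigins(angularOrigin) // explicit origin, required when credentials are allowed
              .AllowAnyHeader()
              .AllowAnyMethod()
              .AllowCredentials();
    });
});

var app = builder.Build();

// The CORS middleware must run before the endpoints that accept cross-origin calls.
app.UseCors();

// Placeholder endpoint so the sketch runs on its own.
app.MapGet("/", () => "ok");

app.Run();
```

With a policy like this, the browser's preflight OPTIONS request would be answered by the service itself instead of by the gateway.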

- ABP Framework version: v4.4.2

- UI type: Angular

- DB provider: EF Core (Mysql)

- Tiered (MVC) or Identity Server Separated (Angular): yes

Context:

Currently we have FileManagement configured to upload large files (1 - 5 GB) to AWS S3 with Volo.Abp.BlobStoring.Aws. We have noticed that when uploading files of 300 MB or more to the ABP server, the file is first loaded into memory or onto disk on the server and only then pushed to AWS S3.

This creates a bottleneck for us, since we constantly need to upload files of approximately 1 to 5 GB.

How can I configure FileManagement to upload to S3 without having to wait for the entire file to arrive on the server first?

- Steps to reproduce the issue:"

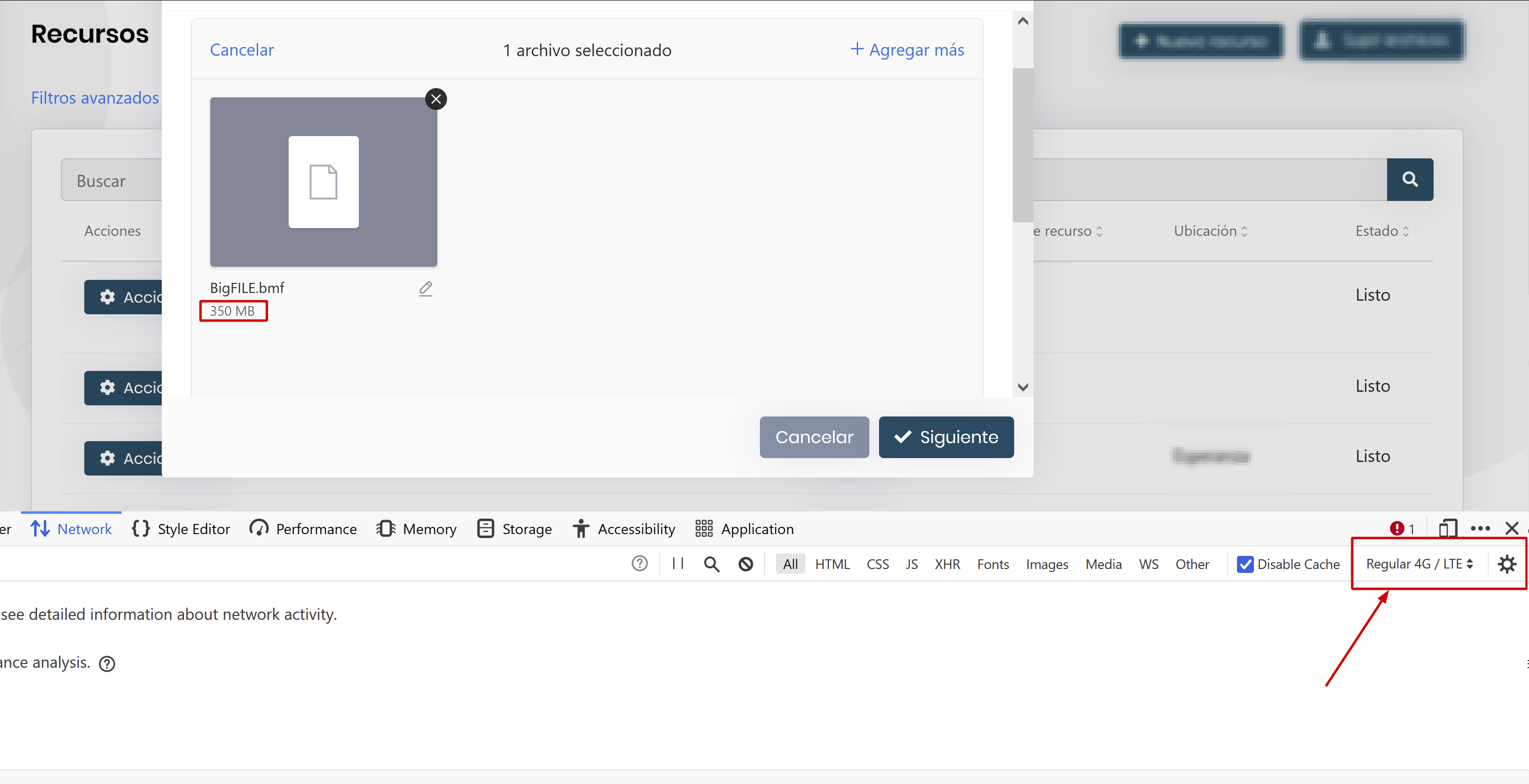

1.- If test in local environment, configure the network in the browser, as in the following image

2.- Apply traces in BlobStoring SaveAsync Function 3.- Upload the file

- Current Configurations

ApplicationModule:

Configure<AbpBlobStoringOptions>(options =>

{

options.Containers.ConfigureAll((containerName, containerConfiguration) =>

{

containerConfiguration.UseAws(aws =>

{

aws.AccessKeyId = accessKeyId;

aws.SecretAccessKey = secretAccessKey;

aws.Region = region;

aws.CreateContainerIfNotExists = false;

aws.ContainerName = bucket;

});

containerConfiguration.ProviderType = typeof(MyAwsBlobProvider);

containerConfiguration.IsMultiTenant = true;

});

});

Sample class used to trace the file upload:

using System;

using System.Threading.Tasks;

using Amazon.S3.Transfer;

using Microsoft.Extensions.Logging;

using Microsoft.Extensions.Logging.Abstractions;

using Volo.Abp.BlobStoring;

using Volo.Abp.BlobStoring.Aws;

namespace MyApp.Container

{

public class MyAwsBlobProvider : AwsBlobProvider

{

public ILogger<MyAwsBlobProvider> Logger { get; set; }

public MyAwsBlobProvider(

IAwsBlobNameCalculator awsBlobNameCalculator,

IAmazonS3ClientFactory amazonS3ClientFactory,

IBlobNormalizeNamingService blobNormalizeNamingService)

: base(awsBlobNameCalculator, amazonS3ClientFactory, blobNormalizeNamingService)

{

Logger = NullLogger<MyAwsBlobProvider>.Instance;

}

public override async Task SaveAsync(BlobProviderSaveArgs args)

{

var blobName = AwsBlobNameCalculator.Calculate(args);

var configuration = args.Configuration.GetAwsConfiguration();

var containerName = GetContainerName(args);

Logger.LogInformation("[MyAwsBlobProvider::SaveAsync] FileName={BlobName} Bucket={ContainerName}", blobName, containerName);

using (var amazonS3Client = await GetAmazonS3Client(args))

{

if (!args.OverrideExisting && await BlobExistsAsync(amazonS3Client, containerName, blobName))

{

throw new BlobAlreadyExistsException(

$"Saving BLOB '{args.BlobName}' failed because it already exists in the container '{containerName}'! Set {nameof(args.OverrideExisting)} if it should be overwritten.");

}

if (configuration.CreateContainerIfNotExists)

{

await CreateContainerIfNotExists(amazonS3Client, containerName);

}

var fileTransferUtility = new TransferUtility(amazonS3Client);

// SOURCE: https://docs.aws.amazon.com/AmazonS3/latest/userguide/mpu-upload-object.html

var fileTransferUtilityRequest = new TransferUtilityUploadRequest

{

BucketName = containerName,

PartSize = 6L * 1024 * 1024, // 6 MiB

Key = blobName,

InputStream = args.BlobStream,

AutoResetStreamPosition = true,

AutoCloseStream = true

};

fileTransferUtilityRequest.UploadProgressEvent += displayProgress;

await fileTransferUtility.UploadAsync(fileTransferUtilityRequest);

}

}

private void displayProgress(object sender, UploadProgressArgs args)

{

if (args.PercentDone % 10 == 0) {

Logger.LogInformation($"[MyAwsBlobProvider::SaveAsync] {args}");

}

}

}

}
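One detail about the trace class above: the progress event may fire many times while `PercentDone` stays at the same value, so the `% 10` check can log the same step repeatedly on a large file. A minimal sketch of one way to log each 10% step only once follows. `ProgressThrottle` is a hypothetical helper, not part of ABP or the AWS SDK, and the percentages in `Main` are simulated.

```csharp
using System;

// Hypothetical helper (not part of ABP or the AWS SDK): reports each
// 10% step once, even if the progress callback repeats a percentage.
// Not thread-safe; add locking if callbacks can arrive concurrently.
public sealed class ProgressThrottle
{
    private int _lastReportedStep = -1;

    // Returns true only the first time a given 10% step is reached.
    public bool ShouldReport(int percentDone)
    {
        var step = percentDone / 10;
        if (step <= _lastReportedStep)
        {
            return false;
        }

        _lastReportedStep = step;
        return true;
    }
}

public static class Program
{
    public static void Main()
    {
        var throttle = new ProgressThrottle();

        // Simulated PercentDone values, as an upload event might deliver them.
        foreach (var percent in new[] { 0, 0, 3, 10, 10, 10, 14, 20, 100 })
        {
            if (throttle.ShouldReport(percent))
            {
                Console.WriteLine($"Progress: {percent}%");
            }
        }
    }
}
```

In `displayProgress`, the condition `args.PercentDone % 10 == 0` could then be replaced by a call such as `throttle.ShouldReport(args.PercentDone)`, with one throttle instance per upload.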

Regards, Yolier